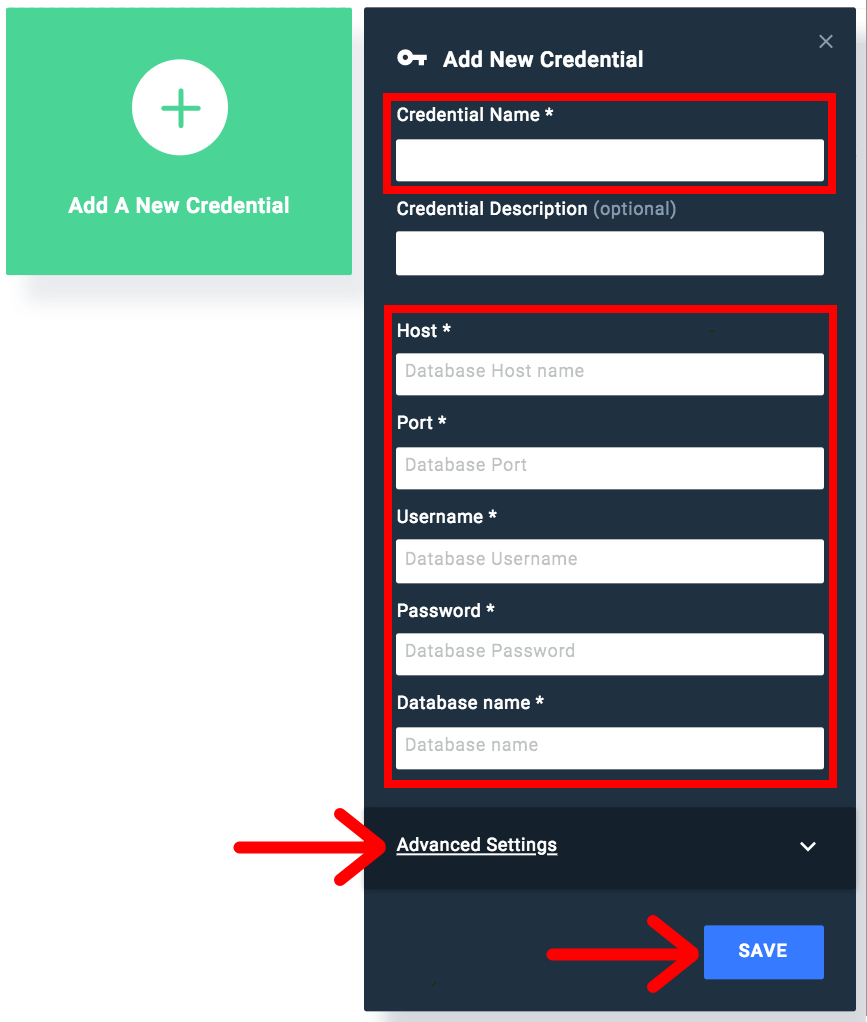

Amazon Kinesis Data Firehose is a fully managed service for delivering real-time streaming data to destinations such as Amazon Simple Storage Service (Amazon S3), Amazon Redshift, Amazon OpenSearch Service, Amazon OpenSearch Serverless, Splunk, and any custom HTTP endpoint or HTTP endpoints owned by supported third-party service providers, including Datadog, Dynatrace, LogicMonitor, MongoDB, New Relic, Coralogix, and Elastic. In this post, we collect, process, and analyze data streams in real time.

Kinesis Data Firehose is part of the Kinesis streaming data platform, along with Kinesis Data Streams, Kinesis Video Streams, and Amazon Kinesis Data Analytics. With Kinesis Data Firehose, you don't need to write applications or manage resources. You configure your data producers to send data to Kinesis Data Firehose, and it automatically delivers the data to the destination that you specified. You can also configure Kinesis Data Firehose to transform your data before delivering it.

For more information about AWS big data solutions, see Big Data on AWS. For more information about AWS streaming data solutions, see What is Streaming Data?

A delivery stream is the underlying entity of Kinesis Data Firehose. You use Kinesis Data Firehose by creating a Kinesis Data Firehose delivery stream and then sending data to it. For more information, see Creating an Amazon Kinesis Data Firehose Delivery Stream and Sending Data to an Amazon Kinesis Data Firehose Delivery Stream.

A record is the data of interest that your data producer sends to a Kinesis Data Firehose delivery stream. Producers send records to Kinesis Data Firehose delivery streams. For example, a web server that sends log data to a delivery stream is a data producer. You can also configure your Kinesis Data Firehose delivery stream to automatically read data from an existing Kinesis data stream, and load it into destinations. For more information, see Sending Data to an Amazon Kinesis Data Firehose Delivery Stream.

Kinesis Data Firehose buffers incoming streaming data to a certain size or for a certain period of time before delivering it to destinations. For Amazon S3 destinations, streaming data is delivered to your S3 bucket.

Amazon Redshift allows you to easily analyze all data types across your data warehouse, operational database, and data lake using standard SQL. A GEOGRAPHY value in Amazon Redshift can define two-dimensional (2D), three-dimensional (3DZ), two-dimensional with a measure (3DM), and four-dimensional (4D) geometry primitive data types. A two-dimensional (2D) geometry is specified by longitude and latitude coordinates on a spheroid.

Follow the instructions below to connect to your Amazon Redshift instance.

1. Click on the Create button and then on Data Source.
2. When the New Connection popup appears, provide a name and description for your Amazon Redshift connection.
3. Select Amazon Redshift from the list of available database types. Additional configuration fields will appear.
4. Select the Include Schema in SQL checkbox to add the schema name when addressing database tables in SQL queries.
5. Next, enter the name or IP address of the server hosting your instance of Amazon Redshift.
6. The default TCP/IP port number for this data source will automatically appear.
7. Enter the name of the database containing the data that you require.
8. The default driver required for this connection will automatically be selected. If multiple drivers are available, select the one you prefer.
9. Enter the username and password of your Amazon Redshift account.

Once you have completed your connection information, you will have several options:

- Advanced Connection Editor: This will take you to the Data Source page in the Admin Console and allow you to edit all the advanced options available for your database.
- Test Connection: This will validate the connection parameters you provided to ensure it can connect to your database. If the connection was successful, a message will appear.
- Create View: This will save your connection and get you started on creating a view based on it.
- Save & Close: This will allow you to save your connection and close the New Connection popup, returning you to the page you were on previously.
- Cancel: This closes the New Connection popup without saving your connection, returning you to the page you were on previously.

We recommend testing the connection, and then saving it by using the Save & Close button.
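The Amazon Redshift connection fields in this guide (server name or IP address, TCP/IP port, database name, and the Include Schema in SQL option) can be illustrated with a small sketch. It assumes the usual Redshift JDBC URL form `jdbc:redshift://host:port/database` and the default Redshift port 5439. The `RedshiftConnection` class, its field names, and the example host are hypothetical and are not part of any tool described here.

```python
from dataclasses import dataclass

# Assumption: 5439 is the default Amazon Redshift port that the form pre-fills.
DEFAULT_REDSHIFT_PORT = 5439


@dataclass(frozen=True)
class RedshiftConnection:
    """Hypothetical model of the fields entered in the New Connection popup."""

    host: str
    database: str
    port: int = DEFAULT_REDSHIFT_PORT
    include_schema: bool = True  # mirrors the "Include Schema in SQL" checkbox

    def jdbc_url(self) -> str:
        """Combine server, port, and database into a JDBC-style URL."""
        if not self.host or not self.database:
            raise ValueError("host and database are required")
        if not 1 <= self.port <= 65535:
            raise ValueError(f"invalid TCP/IP port: {self.port}")
        return f"jdbc:redshift://{self.host}:{self.port}/{self.database}"

    def table_ref(self, schema: str, table: str) -> str:
        """Prefix the table with its schema only when the option is enabled."""
        return f"{schema}.{table}" if self.include_schema else table


# Example with placeholder values (not a real cluster).
conn = RedshiftConnection(host="example-cluster.example.com", database="dev")
print(conn.jdbc_url())
print("SELECT * FROM " + conn.table_ref("public", "sales"))
```

Validating the host, port, and database before building the URL mirrors, in a very reduced form, what a Test Connection step checks before any network call is made. The username and password are deliberately left out of the URL here.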

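The Kinesis Data Firehose buffering rule described earlier (data is held until it reaches a certain size or a certain period of time has passed, then delivered) can be sketched as a small in-memory model. This is an illustration only and not the AWS API: the `BufferSketch` class, its thresholds, and the explicit `now` argument are all assumptions made for the example, and the time limit is only checked when a record arrives, whereas a real service would also deliver on a timer.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BufferSketch:
    """Toy model: deliver a batch when a size or time threshold is reached."""

    max_bytes: int        # illustrative size threshold
    max_seconds: float    # illustrative interval threshold
    _records: List[bytes] = field(default_factory=list)
    _size: int = 0
    _opened_at: Optional[float] = None

    def add(self, record: bytes, now: float) -> Optional[List[bytes]]:
        """Buffer one record; return the batch if a threshold is hit, else None."""
        if self._opened_at is None:
            self._opened_at = now  # the interval starts with the first record
        self._records.append(record)
        self._size += len(record)
        size_hit = self._size >= self.max_bytes
        time_hit = now - self._opened_at >= self.max_seconds
        return self.flush() if size_hit or time_hit else None

    def flush(self) -> List[bytes]:
        """Hand back everything buffered so far and reset the buffer."""
        batch = self._records
        self._records, self._size, self._opened_at = [], 0, None
        return batch


buf = BufferSketch(max_bytes=10, max_seconds=60.0)
print(buf.add(b"abcd", now=0.0))   # below both thresholds
print(buf.add(b"efgh", now=1.0))   # still below both thresholds
print(buf.add(b"ij", now=2.0))     # size threshold reached, batch delivered
```

Passing `now` explicitly instead of reading the system clock keeps the sketch deterministic, which makes the size-based and time-based paths easy to exercise separately.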